- Do I Need a Car Accident Attorney?

- How Our Contingency Fees Work

- Starting a Free Car Accident Lawsuit Consultation

- Compensation After a Car Accident

- Common Causes of Car Accidents

- Common Types of Car Accidents

- Common Car Accident Injuries

- How Can I Reduce The Risk Of Having An Auto Accident?

- FAQs About Car Accidents

Do I Need a Car Accident Lawyer?

Obtaining the compensation you deserve after a car accident depends on gathering evidence from the crash site and using it to build a strong case in your favor. To do this, a car accident law firm or its investigators may use techniques such as accident reconstruction to determine liability.

A car accident injury lawyer may also obtain footage from traffic cameras or nearby businesses that captured the crash as it happened. They will also review the police report for mistakes that could hurt your case.

Collecting Evidence for Your Case

Other steps a car accident attorney takes to gather admissible evidence for the court or insurance company, and to advance your claim, include:

- Interviewing witnesses who can provide valuable information and may have recorded the accident on their cell phones

- Taking depositions from the other people involved in the accident, and accompanying you to your own deposition if opposing counsel requests one

- Obtaining and reviewing your medical records to prove the extent of your injuries

- Calculating damages, both economic and non-economic

- Communicating with the insurance company on your behalf

- Negotiating the settlement amount with the insurer

Negotiating Your Car Accident Settlement

Negotiation is an important part of the claims process. Insurers commonly open negotiations with a lowball offer, and they need to know that the car accident lawyers at Wilshire Law Firm will not accept one. Instead, our experienced team has a good idea of what your claim is worth and works to obtain it.

The bottom line is that you can handle your claim on your own. However, doing so would put you at a disadvantage, since the at-fault driver and their insurance company will typically have lawyers of their own.

Retaining an attorney, or even scheduling a free case review, gives you the legal advice you need. This is vital, because accepting a settlement that does not cover your damages can be financially devastating. Remember, once you accept an insurance company’s offer and sign a release of liability, you are on your own, even if you later need additional medical care.

We Use Contingency Fees for Your Car Accident Lawsuit

Our Zero Fee Guarantee means our clients aren’t charged a cent in legal fees unless we make a recovery. There will be no hidden conditions and no hidden fees for your personal injury case!

A contingency fee is a type of payment a lawyer receives for their work on a personal injury lawsuit. Unlike a fixed fee, it is calculated as a percentage of the amount the client recovers. This arrangement offers several advantages for a client who may be out of work and trying to recover. Most importantly, if the case is not resolved in the client’s favor, the law firm does not receive a fee for its work.

LEARN MORE: Contingency Fees

Free Consultation with Our Car Accident Law Firm

Wilshire Law Firm offers a free review of your car accident injury case. Accepting this offer to meet with our experienced legal team puts you under no obligation to retain our firm. Rather, it provides a chance to get to know our lawyers, ask questions about your claim and confidentially discuss options going forward.

Although Wilshire Law Firm would be honored to help you, we understand that it is critical for our clients to feel comfortable with our representation. Setting up a free, confidential consultation gives prospective clients a chance to determine whether we are a good fit. It is also a first step toward receiving the compensation they deserve.

Compensation After a Car Accident

If you have been injured in a car accident caused by someone else’s negligence or recklessness, you should look for an experienced car accident lawyer. A skilled negotiator and litigator can help you pursue the maximum monetary compensation for your losses.

Even a seemingly minor accident can quickly become expensive. Car accident injuries can take months, even years, to fully heal, while lost wages and other expenses pile up.

If you were injured in a crash caused by someone else, you may be entitled to the following compensation:

- Special damages – Past and future medical bills, lost wages, loss of earning capacity, necessary home or vehicle modifications, replacement services such as childcare or home and vehicle maintenance, etc.

- General damages – Physical pain and suffering, loss of consortium, emotional distress, etc.

At Wilshire Law Firm, we have recovered over $1 billion in damages for our injured clients. Get started with us today. Call us 24/7 for a free no-obligation case review.

Identifying and Suing Parties Involved in a Car Accident Lawsuit

Identifying the liable party or parties and proving their fault is the key to a successful car accident claim. This is where working with a top auto accident lawyer is essential, as it can be difficult to prove both liability and the full extent of your damages.

Depending on the facts of your case, several parties may be found liable for the damages you have suffered, including:

- Other drivers

- Vehicle owners

- Driver employers (if a driver was on the clock)

- Manufacturers / shipping companies / retailers / marketers

Determining Fault in Car Accident Cases

One complicating factor in a car accident claim is what happens if you were partially at fault for the accident. California handles this scenario through a system called Pure Comparative Fault. Also known as Pure Comparative Negligence, this system reduces a victim’s compensation in proportion to their share of fault, and it still allows recovery even if the victim was mostly at fault.

For example, if you are found to be 20% at fault for an accident and your total damages are $100,000, your award will be reduced by 20%, to $80,000. Call an auto accident lawyer for clarification on how Pure Comparative Fault applies to your case.

Dealing with Uninsured and Underinsured Drivers

If the at-fault driver doesn’t have car insurance, or doesn’t carry enough of it, this may also affect your case. Unfortunately, many drivers here in California are either uninsured or underinsured. Some insurance industry studies estimate that more than 15% of California drivers are uninsured.

Because so many drivers are uninsured, we recommend carrying uninsured/underinsured motorist coverage. With this coverage, if you’re hit by an uninsured or underinsured driver, you can recover damages directly from your own insurer instead of filing a claim against a third party. Without it, you may be unable to recover some or all of the damages that result from your accident.

Top Causes of Car Accidents

With features like automatic emergency braking, lane-keep assist, and forward-collision warning reaching the market over the past two decades, it’s clear that car manufacturers are investing in technologies that prevent accidents and keep drivers and passengers safer. But even though vehicles may be safer now, driving is still dangerous.

Driver error is a common cause of car accidents, but it is not the only one. Car accidents tend to happen for the following reasons:

- Distracted Driving – Distractions are everywhere. Cell phones, conversations with passengers, or even changing the radio station can lead to a car accident.

- Driving While Intoxicated – Crashes involving an alcohol-impaired driver account for about 30 percent of all traffic fatalities. [1]

- Recklessness – Speeding contributes to more than one third of all traffic fatalities. [2]

- Poor/Unsafe Road Conditions – Heavy rain and strong winds can contribute to vehicle accidents. Cars may hydroplane, slip out of control, or suddenly veer into another vehicle’s path in adverse conditions. Roads that government authorities fail to maintain adequately can also contribute to car accidents.

- Mechanical Defect – When a car is designed, built, or sold with defective parts or not maintained properly, an auto accident can occur due to a mechanical malfunction.

Regardless of the cause, we have a proven team of auto accident attorneys who can help you. Contact a personal injury lawyer from Wilshire Law Firm today to discuss the cause of your vehicle accident and find out whether you have a valid car accident claim.

Most Common Types of Car Accidents

Here are some of the most common types of motor vehicle accidents:

- Head-on collisions

- Rear-end collisions

- Side-impact or T-bone collisions

- Sideswipe accidents

- Low-impact collisions

- Single-car accidents

- Pile-up / multi-car accidents

- Left-turn accidents

- Intersection accidents

- Merging accidents

- Failure to yield accidents

- Rollover accidents

- Motorcycle accidents

- Hit and run accidents

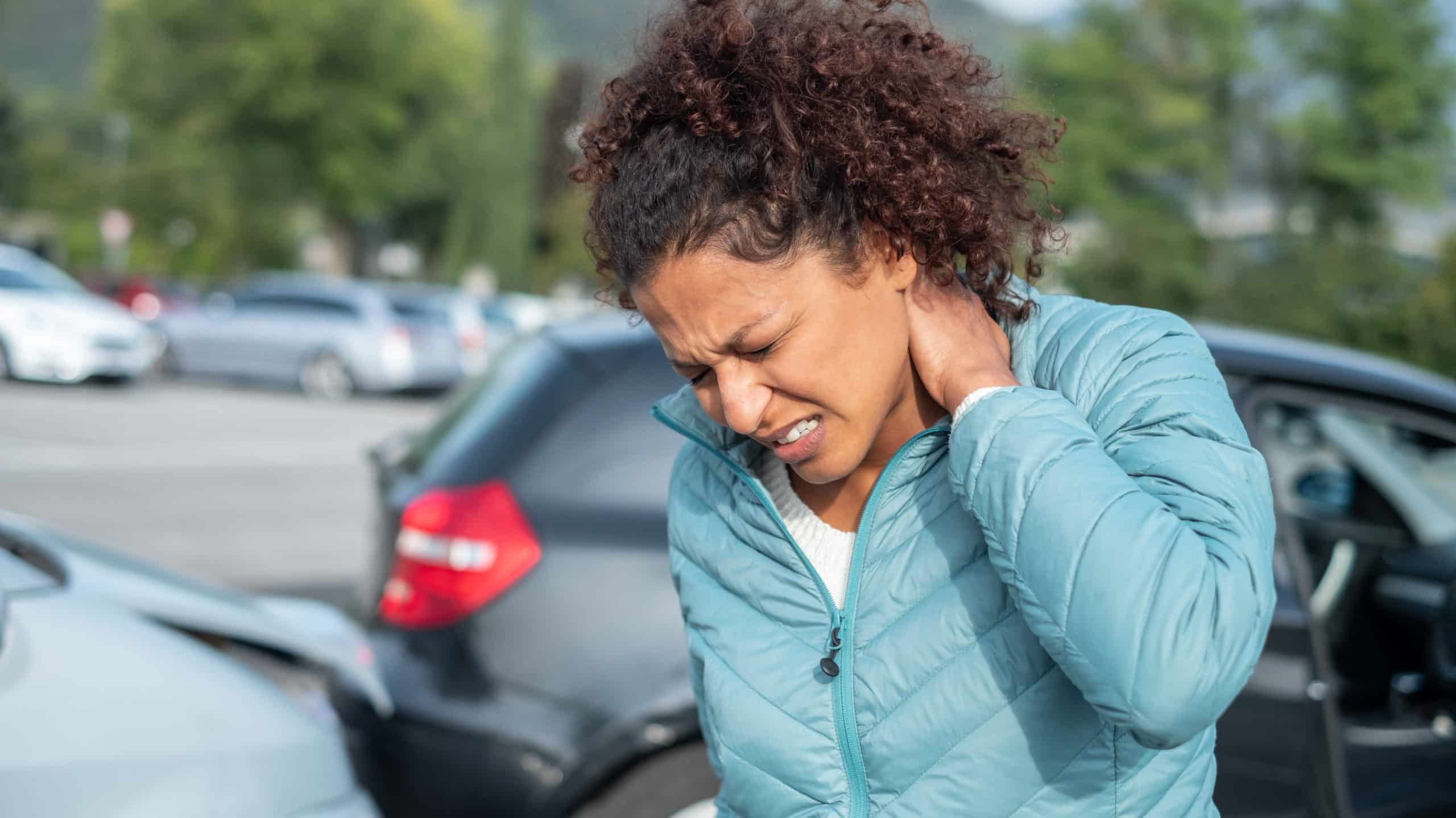

After a severe car crash, accident victims usually deal with vehicle damage and property loss. However, their losses often go far beyond that. Catastrophic injuries can alter a car accident victim’s life forever.

Most Common Injuries from Car Accidents

In 2016, nearly 3.2 million people were injured in motor vehicle crashes, according to the National Highway Traffic Safety Administration (NHTSA). Even low-impact and low-speed accidents can cause serious injuries.

Some of the most common car accident injuries include:

- Whiplash

- Spinal cord injury

- Traumatic brain injury (TBI)

- Internal bleeding

- Broken bone(s)

- Amputation

- Blunt force trauma

- Coma

- Sternum and chest injuries

Many car accident victims do not feel their injuries right away, often because of the adrenaline rush that follows a crash. If you have been involved in a car accident, it’s essential to seek prompt medical attention to determine the true extent of your injuries.

A car crash attorney at Wilshire Law Firm, along with our extensive network of medical, investigative, and other professionals, can help put accident victims on their path to recovery. You and your family deserve the best legal representation possible for your car accident injury, and this is where our auto accident lawyers can help!

How Can I Reduce The Risk Of Having An Auto Accident?

There are precautions drivers can take to reduce their risk of getting into an accident. If you’re looking to keep yourself and everyone else safer on the road, consider the following.

- Keep your vehicle roadworthy. Falling behind on your car’s maintenance schedule can lead to serious problems down the line. You should also use NHTSA’s recall lookup tool to verify that your vehicle has no known safety issues.

- Avoid drugs and alcohol. Just like their cars, drivers need to be roadworthy, too. Drivers should be well-rested and in “good driving condition” before getting behind the wheel, and that includes being sober.

- Be a defensive driver. Drivers should be aware of those around them. They should watch out for other vehicles that are speeding, maintain a safe distance from other cars, and look out for any potential safety hazards on the road.

- Keep your eyes on the road. Drivers should turn off their cell phones, lower the radio volume, and remove any other distractions that may prevent them from focusing on the road.

Keep in mind that even if you are careful, you can still be the victim of a car accident. If you are, make sure to call an experienced car accident law firm that can help you file a personal injury claim and secure compensation for your losses.

Frequently Asked Questions About Car Accidents

What Is The Statute Of Limitations On A Car Accident?

In most cases, the statute of limitations for filing a personal injury claim is two years from the accident date. Still, several factors can shorten or extend the time a victim has to file a claim. To protect your claim and pursue the maximum compensation you deserve, speak with an experienced car accident lawyer as soon as possible so that no deadline is missed.

What Should I Do After an Automobile Accident?

If you have been involved in a car accident, we recommend taking the following steps:

- Don’t panic.

- Check for injuries.

- Call the police.

- Exchange contact and insurance information with the other driver(s).

- Begin collecting evidence.

- Consult with the car accident lawyers at Wilshire Law Firm.

A dedicated car accident attorney can be the difference between winning and losing your case!

How Can An Experienced Car Accident Lawyer At Wilshire Law Firm Help?

After a car accident, how you deal with the other party’s insurance company can make all the difference between a successful claim and an unsuccessful one. A car crash attorney at Wilshire Law Firm will draw on extensive experience with insurance companies to get you the best settlement possible in your case. We know the tactics insurance companies use to minimize their payouts, and we have developed proven legal strategies for getting our clients paid.

Having recovered more than one billion dollars for our clients, we know our compassionate, client-first approach gets results. To find out how we can help you, contact Wilshire Law Firm today at (800) 501-3011 or fill out our online contact form.

Our Los Angeles Office Location

Wilshire Law Firm

Los Angeles

3055 Wilshire Blvd., 12th Floor

Los Angeles, CA 90010

Tel: (213) 335-2402

Open Mon – Sun

Available 24 Hours

More Helpful Resources from Wilshire Law Firm

- What To Do After a Car Accident

- How To Describe a Car Accident – Lawsuit Guidance & Insurance Reporting

- How to Deal With Insurance Companies

- How to Hire an Auto Accident Lawyer

- The Dangers of Negligent Car Repairs

- Types of Car Accident Defenses and Claims in California

- What Happens at a Car Accident Mediation?

- What If I Get Into an Accident Without Insurance?

- What If I’m at Fault in a Car Accident?

- California Car Accident FAQ